It's All within Reach

How do our brains understand what our eyes are telling us?

From the moment you open your eyes in the morning, your sense of sight helps you navigate and interact with the world. But how do our brains understand what our eyes are telling us? And how do we know what's surrounding us, where we can move, and what objects are within reach? Emilie Josephs, a PhD student at the Graduate School of Arts and Sciences, is discovering that the way our brain processes vision is even more complex than scientists initially thought.

Interview Highlights

"I found myself wondering, "Okay, I'm learning all these principles of how we understand a navigable environment. but how does this apply to my work desk?" I put that idea aside and three years later came back to it when I got to grad school. It looks like there's something different going on. And so now I'm trying to chase that down." - Emilie Josephs

"For a long time people in my field have kind of thought that, well, probably the same computations are used for navigable-scale space and for reachable-scale space. To some extent that might be true. But in my research recently, we've been finding that there might some additional computations that you need to recruit in order to behave in near space that you don't have to if you're behaving in far space." - Emilie Josephs

Full Transcript

Anna Fisher-Pinkert: From the Harvard Graduate School of Arts and Sciences, you’re listening to Veritalk. Your window into the minds of PhDs at Harvard University.

I’m Anna Fisher-Pinkert.

For the next few episodes, we’re going to talk about sensing. How we taste, smell, feel, and see the world. And, I’m going to confess something right now: I’m kind of a klutz.

Case in point, the other day, I was cleaning up after a nice, relaxing breakfast. I washed my glass French press in the sink, and tried to place it back, gently, on the counter. Instead, I missed the counter entirely and smashed it on the edge of the dishwasher. There was glass everywhere. And that’s not even unusual for me. You can ask my wife. I’m always accidentally cutting myself with kitchen knives or dropping pens under my desk or losing my rings down the bathroom sink. And yes, I’ve been to the eye doctor and I have almost 20/20 vision. So if it’s not my eyes. . . what’s going on? Lucky for me, I work at Harvard – and there is someone here who is studying why people smash their beloved French presses. Well, not quite, but. . . I’ll just let her introduce herself.

Emilie Josephs: My name is Emilie Josephs. I do cognitive science. My research is looking at the parts of the brain that we think are involved in processing the visual world. And, specifically, I'm interested in how we make sense of the world within reach.

AFP: Emilie is a sixth-year PhD student in psychology at the Graduate School of Arts and Sciences at Harvard University. Emilie isn’t looking at people’s eyes, she’s looking at the brain - and what happens when the brain has to take on the task of processing visual information. It turns out that even though we feel like visual information comes into our brains very naturally and seamlessly, there are different parts of the brain that do all sorts of detailed work to figure out what it is that we’re looking at.

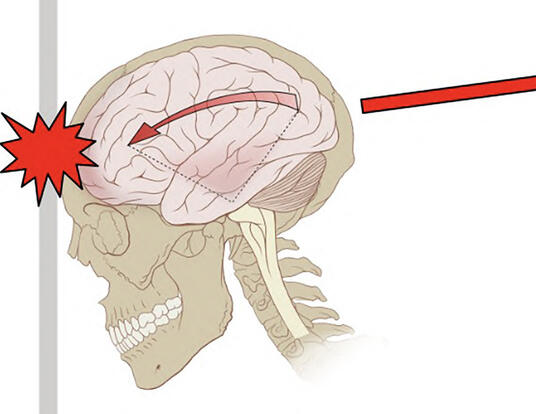

EJ: So over the last few decades people have found that there are specific regions that respond more strongly to certain kinds of visual input. So there are areas that respond most to faces, and we think they're specialized for face processing. There are areas like that for objects. Within there, there are regions specialized for processing tools, even, because they have particular behavioral relevance. So, that information seems to be processed in different parts of the brain.

AFP: Now, there’s a whole other region that responds to scenes – and that’s what Emilie studies.

EJ: When we say "scenes" in visual science, we mean not just outdoor landscapes -- I think that's what we think of first -- but also indoor rooms, and sort of urban landscapes as well. So any place where you need to just move your body around, we call that a "scene." And so far, we've located three regions in the visual cortex that seem to be doing a lot of the work of representing these environments.

AFP: It turns out that our brains actually have to do a lot of different kinds of processing to understand where we are and how we can move through spaces without walking into a door or falling off a cliff. Each of those three areas of the visual cortex does something a little different. The first one is called the parahippocampal place area. Emilie took me through a kind of visualization exercise to help me access it, and you can do it too.

EJ: So if you just close your eyes and I tell you: Imagine that there's a horizontal surface stretching from your feet all the way out to the horizon. What kind of space do you feel like you're in, maybe?

AFP: Am I. . . like, maybe the beach?

EJ: Exactly. That's exactly what I was picturing.

AFP: Okay.

EJ: Every beach has that structure. But if you think about something like a kitchen: That's going to have countertops, right? And cabinets above your head. And that creates a really specific structure in the world as well. And it's really diagnostic. So what we think the PPA, the parahippocampal place area, is doing is decoding that visual structure to the environment that you're in.

AFP: There’s another region called the occipital place area.

EJ: Recent work is starting to suggest that that region is involved in computing local navigational affordances. Which is just a fancy way of saying, "can I walk to the left of that couch or not." Given the world in front of me at this very moment, where can I walk?

AFP: Finally, there’s the retrosplenial cortex, which connects the visual information to what you have in memory.

EJ: So now I can walk out of this podcast studio, I can look around and I know that if I turn left, I'll end up passing the stadium, and I know that eventually there'll be a bridge to cross over into Cambridge. And so that linking of the current view to what I know about the world, and the layout of the world, seems to be done in that area.

AFP: Okay, so that’s really your directional sense, to a certain extent?

EJ: It’s a little different than directional sense, which I read as, are you facing north or south, or so forth.

AFP: Is it like the way that I can walk around my house like with my head on my phone and I know exactly where the stairs are and I'm not gonna trip?

EJ: Yeah!

AFP: Okay, so I can see that's the bottom of the steps, which means the next thing that's going to happen is there's going to be some more steps.

EJ: It seems like it's maybe something like that. Yeah. It's keeping a template of the global environment in mind at the same time as what you're locally knowing and seeing about the world.

AFP: So there is a ton of great research out there about how we perceive scenes that we can navigate in. We are completely set on beaches and cliffs and hallways, all of that. But, when Emilie learned about these scene-processing regions as an undergrad, she noticed that something was missing.

EJ: I found myself wondering, "Okay, I'm learning all these principles of how we understand a navigable environment. Okay, but how does this apply to my work desk?" I was typing on the computer one day and I was like, "Huh! How does this apply to this?" And I put that idea aside and three years later came back to it when I got to grad school, and just tried it out, and found out that wow actually these principles don't completely apply. It looks like there's something different going on. And so now I'm trying to chase that down.

AFP: Emilie decided to explore something that she calls a “reachspace.” Not the “navigable” spaces that we walk around in, but spaces where we need to reach out and manipulate objects to get things done. Let’s say you’re a surgeon – you might need to navigate a busy operating room to get to your patient. And we pretty much know how the brain uses vision when you’re trying to walk around the room without tripping or running into things. But once you’re standing in front of the operating table, with instruments laid out on a tray next to you – that’s your “reachspace.” And scientists still don’t totally know how your brain is processing vision when you’re in those spaces.

EJ: For a long time people in my field have kind of thought that, well, probably the same computations are used for navigable-scale space and for reachable-scale space. To some extent that might be true. But in my research recently, we've been finding that there might be some additional things, some additional computations, that you need to recruit in order to behave in near space that you don't have to if you're behaving in far space.

AFP: To understand how our brains process visual information in reach spaces, Emilie scans a lot of people’s brains trying to find out if different types of scenes generate activity in different parts of the brain. And since the fMRI machine isn't portable, Emilie shows people pictures of different kinds of scenes to see their brains’ activity.

EJ: And the pictures that we show them in my case will be either scenes, or objects, or reachspaces. And we just tell them: "Just watch."

AFP: So, imagine that you lie down in the tube, and the first thing that Emilie shows you is an image of a kitchen in a typical apartment. And your brain lights up like it just walked in the front door.

Your Brain (as played by an old-timey sit-com dad): Honey! I’m home! Aha, lots of vertical surfaces. Maybe those are some cabinets – I wouldn’t wanna run into those!

AFP: Next, you’ll see an object that belongs in that scene, like a tea kettle or a pan.

Your Brain: Firin’ up the old object recognizer. . . oh hey, a tool! That seems useful!

AFP: And finally, you’ll see reach space. Like a kitchen sink with a sponge and dirty dishes.

Your Brain: Oh hey, that’s different!

AFP: When Emilie takes the images of the brain responding to the scene, the object, and the reach space, she gets really interesting patterns of activity in the visual cortex.

EJ: We go back to our lab and we analyze it and we see, okay: Were there parts of the brain that were more active when reach spaces were on the screen? And were there parts of the brain that were more active when scenes are on the screen? And when we do that we find, yep, there are three scene regions. That's good. We knew that before -- confirming -- but we also find regions that are more active to reach spaces, and that's what we didn't know about before.

AFP: So are they subsets of the scene space? Or is it totally new parts of the brain?

EJ: Good question. They are totally different from the scene areas. They are potentially overlapping in one case, but in other cases really separate. It's not the same system.

AFP: As you've been saying we need different computations to happen in the brain. Because if I'm approaching a room that looks like a kitchen I need to make sure I don't bump into that counter. But if I'm standing in front of the counter I need to make sure that I chop vegetables and don't chop my hand or throw the knife on the ground. And those are really, really different activities!

EJ: Super different. And different in what part of the visual world you need to pay attention to, how granular does that attention have to be? What parts of the body is that information relevant for? These are all completely different in those different tasks in navigating versus reaching.

AFP: So on a personal level I'm really interested in this research because I'm very clumsy. [laughter] So actually, this weekend I knocked my French press off the counter while I was doing dishes, and it broke in my dishwasher. So what is going on? What is supposed to be happening when I'm perceiving reachspace, and how. . . there has to be some sort of interaction between knowing where our bodies are and knowing what we can reach. So how does that work?

EJ: So we're still learning so much about what's going on in the brain. So what we do know, in part, is in order to make that reach movement, you need to calculate things like: Do I use my arm? Do I use my wrist? Do I do sort of a pinching motion, or a full hand kind of grip motion? How many fingers do I use? Should my hand be palm down or palm to the side? We know that all of those different dimensions are being handled. What we don't really know yet and what I'm hoping to find out in my research is: What role is the visual system playing in giving the rest of the brain the information that it needs in order to figure those things out? And, as of right now, because my work is pretty new, we don't have a solid answer yet. We're still discovering that.

AFP: What would be helpful or useful in understanding reach space and how our brain processes that visual information better?

EJ: The work that I think needs to be done is to understand what are the different aspects of those spaces that matter for the brain. How is it that we can represent the different number of objects in a space? What are the visual cues that tell me whether this is an object that needs a little pinch grip or a strong full hand grip? Where in the brain is that happening? How are individual neurons representing that? What are the dimensions that they care about? What are even the features that matter? To date, we've thought that the kinds of computations that your brain can do to understand spaces that you can navigate in are the same as the ones that you can do to understand the space that you reach in. At this point, all we're trying to do is figure out: Is that true or not? That's where we're at! This is such a new science, that's where we are! And my work is showing that, in fact, that's not true. You need more specialized computations in order to visually make sense of reachable spaces.

AFP: So, it’ll be a few years before I know exactly which areas of my brain are epic failing to help me do the dishes. But, Emilie says there is hope for me.

EJ: There is a huge role for training in this whole system. If you think about people like mechanics, people like surgeons, these are people who work in environments that are within reach. The mechanic is working on an engine bay. The surgeon is working on a patient. And each of those people can look at that environment in front of them and understand all the parts immediately, and understand which parts are safe to touch and which are not, and which parts are safe to intervene on, and what tools do I need to use to do it, and how do I need to hold it to do it successfully. I think there's a lot of room here for there to be the sort of natural variation that depends on what kind of experience you have. And I totally believe that it will change the representation in your brain of that space.

AFP: So we can change. We're not permanently bad at reach space or good at reach space and maybe different reach spaces are gonna work better for us.

EJ: Absolutely. My message of hope is that you can learn to use any reach space that you want.

AFP: And that’s quite a relief for me.

Next time on Veritalk, Big Hero 6 introduced us to lovable, huggable robots – but can robots ever really have the same sense of touch as human beings? That’s next time on Veritalk.

Veritalk is produced by me, Anna Fisher-Pinkert.

Our sound designer is Ian Coss.

Our logo is by Emily Crowell.

Our executive producer is Ann Hall.

Special thanks to Emilie Josephs and Phil Lewis, who voiced our brain. Emilie’s research is supported by the NIH Shared Instrumentation Grant Program.

Before I go, I want to tell all of the Veritalk listeners out there about another Harvard podcast that you should check out. It’s called the Harvard Religion Beat, and it’s a pop-up podcast examining religion’s underestimated and often misunderstood role in society. If you’ve ever wondered why mindfulness meditation went mainstream, or why white Evangelicals overwhelmingly support President Trump, or who put the “guilt” in “guilty pleasures” you should check out the Harvard Religion Beat in your favorite podcast app, or at theharvardreligionbeat.simplecast.com.
