Squish Goes the Robot!

Can robots ever learn to feel? Our ability to perform delicate tasks, like giving a gentle hug or picking a piece of fruit, is something that robots can't yet mimic. Ryan Truby, an alum of the Graduate School of Arts and Sciences and the Harvard John A. Paulson School of Engineering and Applied Sciences, has created bioinspired soft robots that can squish, stretch, and feel their way around the world - and they have the potential to change how we understand robotics.

Interview Highlights

"Octopi and other cephalopods are almost entirely soft-bodied organisms. And it is those soft bodies that enable things like an octopus to be able to swim around, to be able to crawl through nooks and crannies, to be able to have incredibly complicated manipulation of locomotion capabilities." - Ryan Truby, on the genesis of one of his bioinspired soft robots.

"Moving forward, if we can give these soft robots very complex and sophisticated means of perception, we're going to learn a lot - not just about soft robots but robotics in general and possibly even how we interpret and see the world." - Ryan Truby, on the future of soft robots.

Full Transcript

Anna Fisher-Pinkert: From the Harvard Graduate School of Arts and Sciences, you’re listening to Veritalk. Your window into the minds of PhDs at Harvard University. I’m Anna Fisher-Pinkert.

In this Veritalk series, we’re talking about sensing: How we taste, smell, feel, and see the world. Last week, we talked with PhD student Emilie Josephs about what happens in our brains when we visualize things within reach.

Emilie Josephs: How is it that we can represent the different number of objects within a space? What are the visual cues that tell me that this is an object that needs a little pinch grip or a full-hand grip?

AFP: But what happens next? What happens when we need to calibrate our sense of touch to interact with the world? When we’re babies, we do a lot of things by feel. We learn that a hard grip will crush a banana, but a soft grip won’t let you hold tight to your teddy bear.

But this week, we’re not going to talk about how human babies learn to feel. We’re going to talk about how robots are learning to interact with the world through touch. And honestly, most robots are still kind of like babies - squishing things they aren’t meant to squish, dropping things they should be holding tight. And that means that most robots aren’t that great for certain delicate kinds of tasks.

RT: So if you think about maybe packing groceries or being on a farm, traditional rigid robotic grippers might squish important produce. If we think hospital settings, you wouldn't want a robot really pinching you. You need this ability to adapt, to gently manipulate and move around objects.

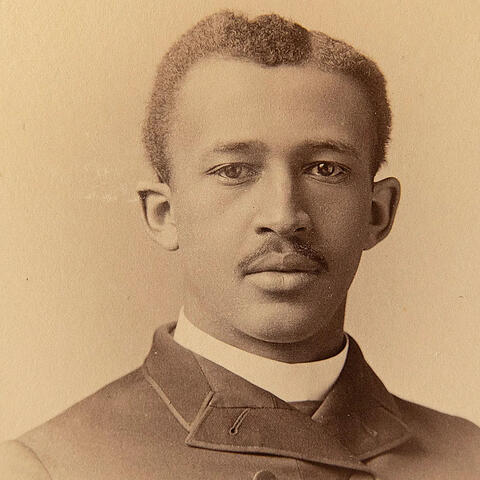

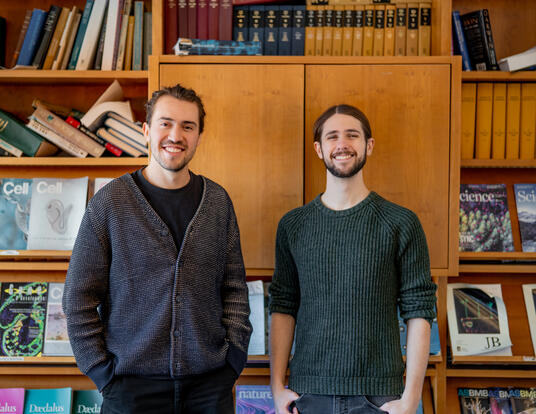

AFP: That’s Ryan Truby. He studied at the Graduate School of Arts and Sciences and the Harvard John A. Paulson School of Engineering and Applied Sciences. In 2018 he graduated with a PhD in Applied Physics.

RT: I did my PhD with Professor Jennifer Lewis who is also at the Wyss Institute. My PhD dissertation looked at developing new materials and manufacturing strategies for creating entirely new types of machines that we call soft robots.

AFP: Ryan is now just down the street doing a postdoctoral fellowship at MIT. He’s interested in soft robots - these squishy, bendable robots that are made of “soft matter.”

RT: Soft robots are incredibly interesting because they are comprised of materials that are squishy. They're deformable, and they'll recover back from their shape. And this includes everything from rubbers, which like a rubber band are very stretchy and elastic, to different types of soft plastics and polymeric materials. Soft matter includes, actually, biomaterials, so the same materials in your body. And the reason that we're interested in creating robots out of these materials is to really take a bioinspired approach to robotics design.

AFP: Living creatures are a huge source of inspiration to roboticists. I can smush my nose down with my finger, and it will pop right back up into place when I’m done. This “deformability” is really important when trying to create robots that can move in new, more flexible ways.

RT: Robots suffer from a number of challenges. It's hard for robots to adapt to environments that are very complex, or unknown, or even dynamic to these systems. Robots do not exhibit sort of a flexibility in their behavior. They suffer from power and computational challenges. But living systems do all these things just fine and they outpace and outperform robots in every way.

AFP: Take that, robot overlords!

RT: We don't want traditional robots really coming into our way, posing sort of a threat or harm or safety concern. Soft robotics open up this really fascinating opportunity for them to engage their environments - to interact with us directly.

AFP: Imagine a car assembly line robot. It’s a pretty good robot, actually - as long as it only encounters certain types of controlled situations, like a factory floor. But if you dropped it in a hospital, and asked it to pick up an elderly patient, you would. . . have some issues.

RT: And one of the challenges, but key needs, in soft robotics is trying to give these systems tactile, human skin-like receptors or sensing capabilities. Giving these sorts of robots proprioception: How am I moving? What is my shape? What is my state? And so a lot of it does come back into: How do I rethink materials that could be conductive, that could still be stretchable and deformable, but open up new opportunities for these soft robots to engage the world around them?

AFP: Instead of bumping into things like a juiced-up Roomba, soft robots can squeeze and stretch to perform delicate tasks. One example is the set of soft robotic fingers that Ryan designed as a part of his PhD work with Jennifer Lewis. Three of these soft robotic fingers dangle from a metal rod, kind of like a claw in a claw machine. And, together, these fingers can perform all sorts of human-like grabs and grips. And the way they move is very different from their hard robot cousins.

RT: So, typically, when you have a sort of robotic manipulator operating on an assembly line or a packaging facility, this robotic finger is going to have very discrete simple moves that it can do. And these grippers are normally prepared, or they know what they're about to grasp. The thing about these soft fingers is that when they bend, sure we can prescribe that bending, but their deformation and their ability to be compliant allows them to wrap around various objects. One of the big enabling features of these soft fingers is that they really open up the different varieties of objects that can be held within their grasp.

AFP: Now, these robot fingers don’t just bend - they also inflate. And that means that they have another way to deform their shape to wrap around an object.

RT: Because we're just going to, in this case, inflate these inflatable fingers around an object, it's going to deform and sort of adapt to whatever that object is - I don't have to perfectly plan out its motions.

AFP: And, each finger is laced with tactile sensors that allow it to know if it has made contact with an object, and how much contact pressure it’s generating. So, if it tries to pick up my paper cup, it will know just the right amount of pressure to apply so that the cup won’t get crushed.

RT: If you just sit at the table and act like you're gonna reach out for something, you sort of have this "C" shape in your hand. A lot of current soft robots, before we started this work, kind of have an "O" shape when they grasp. You don't grasp things in the world with an O shape. You do it first with a "C" and then clasp around. So what embedded 3D printing allowed us to do was to create different networks, different distributed sensors, so that we could go in a soft gentle way - kind of a probing grasp: "Hey have we touched this?" And then once we had, per our algorithm, made decent contact, we could then robustly grab and lift that object up. And that simple algorithm allowed us to grasp again everything from a bag of chips to a stuffed animal, different food items, all different types of things, in a way that a gripper which was more traditional or rigid wouldn't have been able to do.
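As a rough illustration of the two-stage routine Ryan describes (a gentle probing grasp, a contact check, then a firm grab), here is a minimal Python sketch. It is not the team's actual controller. The `SimulatedFinger` class, the pressure units, the thresholds, and the choice to wait until all fingers report contact are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class SimulatedFinger:
    """Hypothetical stand-in for one inflatable finger with a contact sensor."""
    contact_at_kpa: float      # inflation pressure at which the finger first touches the object
    stiffness: float = 0.5     # sensor reading gained per kPa beyond first touch (made-up value)
    pressure_kpa: float = 0.0  # current inflation pressure

    def inflate_to(self, pressure_kpa: float) -> None:
        self.pressure_kpa = pressure_kpa

    def contact_reading(self) -> float:
        # Zero until the finger touches the object, then grows with further inflation.
        return max(0.0, self.pressure_kpa - self.contact_at_kpa) * self.stiffness


def probe_then_grasp(fingers, step_kpa=1.0, max_probe_kpa=30.0,
                     contact_threshold=0.2, grip_boost_kpa=10.0):
    """Inflate gently until every finger senses contact, then tighten the grip.

    Returns (grasped, final_pressure_kpa). All numeric defaults are illustrative.
    """
    pressure = 0.0
    while pressure < max_probe_kpa:
        # Stage 1: probing grasp. Inflate a little and ask "have we touched this?"
        pressure += step_kpa
        for finger in fingers:
            finger.inflate_to(pressure)
        if all(f.contact_reading() >= contact_threshold for f in fingers):
            # Stage 2: contact is good enough, so grab firmly before lifting.
            grip_pressure = pressure + grip_boost_kpa
            for finger in fingers:
                finger.inflate_to(grip_pressure)
            return True, grip_pressure
    # Nothing was found within the probing range: deflate and give up.
    for finger in fingers:
        finger.inflate_to(0.0)
    return False, 0.0


if __name__ == "__main__":
    hand = [SimulatedFinger(5.0), SimulatedFinger(6.0), SimulatedFinger(7.0)]
    print(probe_then_grasp(hand))
```

The point of the sketch is that the controller never plans a finger trajectory. It only raises pressure and reads the sensors, leaving the soft material to conform to the object.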

AFP: The result of all this is a robot that kind of grasps at the world a little bit like a toddler does. The three bendy, inflatable fingers adjust and stretch around an apple, a ball, a teddy bear - using sensory feedback to adjust that grip.

Video courtesy of the Harvard John A. Paulson School of Engineering and Applied Sciences.

RT: This is hugely enabling for robots to not have to think a whole lot about planning a move or grasp. I just inflate, grab, and that sort of embodied intelligence in the deformability and structure of this finger allows it to do just much more in a more adaptable way than traditional robots can.

AFP: The robotic fingers are reacting to the things they encounter in the environment, sort of the way living beings do. But there’s no real reason that these fingers have to look like human fingers. They could look like prehensile tails, or . . . tentacles. In fact, Ryan and his collaborators at Harvard, professor Robert Wood and postdoc Michael Wehner, actually made an example of a soft robot called the Octobot. It’s a little bit bigger than a double-stuff Oreo, and totally see-through, squishable, and deformable. Ryan and the team 3D printed this little guy to show what soft robots could potentially do. The Octobot has no hard components, no computer, no battery - so Ryan and the team had to come up with new ways to power this soft robot.

RT: And so what we decided to do was to really utilize a chemical fuel - specifically a monopropellant called, well, hydrogen peroxide. It's not the hydrogen peroxide you would grab at the store. This is essentially rocket fuel-level concentration. But what's really exciting about something like hydrogen peroxide is it is a simple liquid. Really, if you just dilute it, it becomes pretty safe. But it's also very reactive. And so in the Octobot we have little fuel reservoirs here in the head of the system and we can pressurize this system to force it into this little microfluidic computer. But downstream of that computer is a set of catalysts. And just by barely placing that liquid fuel on the catalyst it just explodes. Not literally, but it decomposes into this gas that's highly pressurized and can inflate the little actuators here on Octobot.
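For reference, the reaction behind that burst of gas is the standard catalytic decomposition of hydrogen peroxide into water and oxygen, written out below. The transcript does not name the catalyst, so it appears only as a generic label here.

```latex
2\,\mathrm{H_2O_2}\,(l) \;\xrightarrow{\text{catalyst}}\; 2\,\mathrm{H_2O} + \mathrm{O_2}\,(g)
```

A small volume of liquid fuel yields a much larger volume of gas, which is what lets it pressurize and inflate the actuators.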

AFP: So, when Ryan says “actuators” he means the little Octobot arms, that curl and uncurl as a result of these chemical reactions. So why would we want a robot that could move around like an octopus?

RT: Octopi and other cephalopods are almost entirely soft-bodied organisms. And it is those soft bodies that enable things like an octopus to be able to swim around, to be able to crawl through nooks and crannies, to be able to have incredibly complicated manipulation of locomotion capabilities.

AFP: Going hardware-free isn’t just great for that deformability we talked about earlier - it also means that the Octobot isn’t dependent on batteries or wires or plugs.

RT: One of the problems that I mentioned with traditional robots is power. So if I have a chemically-powered system like the Octobot, then I could begin thinking about ways of having this system go out and scavenge and harvest its own energy and then using that for autonomous functionality.

AFP: You know, I keep thinking . . . we are actually kind of multiple fluidic and electrical systems that have to go out and scavenge for fuel. So at what point are we talking about an organism versus a machine?

RT: So now you're [laughter] now you're getting into what I want to do with my own research group one day. With robotics we've seen so much success with computation and artificial intelligence fueling sort of . . . the ability for robots to stand poised to have tremendous impact in our society. All the while, and the reason I loved working with Jennifer Lewis here at Harvard, was that we've also, in this age, created so many advances in the way that we can control and program and manufacture materials - specifically soft ones. I do think that here in the next 5, 10 years you're going to begin to see a convergence of us being able to control materials over many length scales with a variety of compositions, forms, you name it! Where at some point we can begin to blur that distinction between material and machine and begin to think about ways in which we could create materials with robotic capabilities and therefore not just have tremendous consequences on soft robotics - not just robots - but totally rethinking what a robot actually is.

AFP: So instead of there being components that are gears and pulleys and levers and switches, the components could be. . .

RT: True artificial muscles. Systems that could interface with the human body, systems that. . . we haven't even thought about yet because it's going to change, I think, that much, the way we think about what a robot is and can be.

AFP: So how different is the way that a soft robot, at this point in its development - how different is the way that these robots experience the world, touch the world, sense things - how different is it between them and us?

RT: So that's a totally open question. And what's really exciting, and this actually plays into some of the work that I'm doing at MIT, is. . . If we do have these systems that are so compliant, so deformable, can have more bioinspired means of sensing, it does suggest that learning might also play a role here. How does artificial intelligence help these systems which are soft and squishy understand that perception?

In robotics, traditionally, we want to try to linearize this rigid system. We want to control this thing very well. And with these soft materials it gets really hard. Everything is very non-linear. And so I actually think, and a lot of people in the field working in this space, are turning to AI and looking at how does the human brain and, you know, our cognition, play a role in how we perceive and touch and feel the world, and trying to translate some of these lessons into, specifically, soft robotics. And so it's an open question, but there's a lot of similarities. And I think that, moving forward, if we can give these soft robots very complex and sophisticated means of perception, we're going to learn a lot - not just about soft robots but robotics in general and possibly even how we interpret and see the world.

AFP: So, the next robots won’t just look more like us, they might perceive more like we do. And that makes me think that the next generation of robots might be more like the Replicants in Blade Runner, or the Cylons on Battlestar Galactica… or just a helpful squid that helps pack our groceries. In any case, it will be exciting to see what’s next.

Next week on Veritalk, we explore two senses, taste and smell, with some very, very, hungry critters.

Jess Kanwal: I like to think of them as voracious eating machines.

AFP: Tune in next week for more Veritalk. You can see the Octobot and the robotic fingers in action on our website at gsas.harvard.edu/veritalk.

If you liked this story, I think you’ll really like learning about how bird feathers are inspiring two PhD students to create structural colors in their lab. You can find that in our very first series on Plumage. Go ahead and treat yourself to another episode right now.

We make this show because we love talking to really smart people who are finding new ways to understand the world. If you like listening to this show as much as we love making it, rate us and leave us a review on Apple Podcasts.

Veritalk is produced by me, Anna Fisher-Pinkert.

Our sound designer is Ian Coss.

Our logo is by Emily Crowell.

Our executive producer is Ann Hall.

Special thanks this week to Ryan Truby and Jennifer Lewis. Ryan Truby’s research is supported by the Wyss Institute for Biologically Inspired Engineering, the National Science Foundation through the Harvard MRSEC, the National Science Foundation Graduate Research Fellowship, and the Schmidt Science Fellows program, in partnership with the Rhodes Trust.
