Putting Common Sense Back in the Driver’s Seat

For De Freitas, emphasis on ‘trolley problem’ is wrong track for effort to build safer driverless cars

In one of the many memorable moments from the television show The Good Place, Michael, a magical being, transports the character Chidi Anagonye from his neighborhood into the driver’s seat of a moving trolley that’s on a collision course with five track workers. If Chidi switches tracks, the five will be spared, but one other worker will die. What should he do?

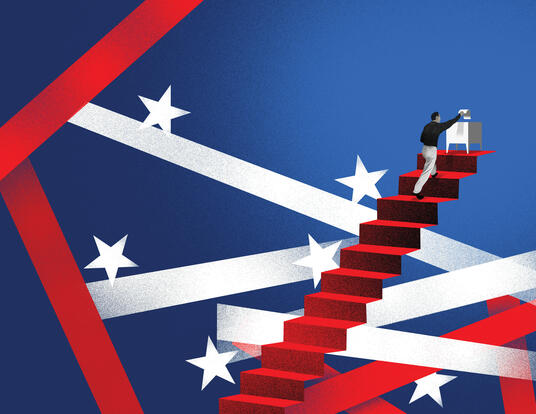

The scene is a dramatization of a thought experiment—the “trolley problem”—that ethicists have used for decades to explore choice and morality. With the development of artificial intelligence (AI), social scientists, moral psychologists, and philosophers have in recent years brought the dilemma to bear on the development of driverless cars, also known as autonomous vehicles (AV). But Julian De Freitas, a PhD student in psychology at Harvard’s Graduate School of Arts and Sciences, says that scholars may be overemphasizing the trolley problem at the expense of understanding the decisions that drivers—machine or human—encounter every day.

“Some social scientists like to focus on the trolley problem because it simplifies moral choices and makes contrasts easy,” De Freitas says. “I think people who are used to thinking along these lines see autonomous vehicles as another venue where they can apply the approach. But if we want to make safer autonomous vehicles, what we really need to understand is the ‘common sense’ that goes into everyday driving. There are plenty of ethical issues there too.”

The Dominant Paradigm

When making the case for autonomous vehicles, manufacturers emphasize that the technology can make personal transportation safer, in part because AVs are controlled by artificial intelligence. AI can’t eliminate the potential for AVs to cause harm, however, which raises ethical questions that scholars have addressed by turning to the trolley problem.

The most famous study, “The Moral Machine Experiment,” used the dilemma as part of an online survey that gathered about 40 million responses from 233 countries and territories around the world. The study, published in the journal Nature on October 24, 2018, found three strong preferences: to spare human lives, to spare more lives, and to spare young lives. The authors of the study, based at MIT, recommended that these preferences “serve as building blocks for discussions of universal machine ethics, even if they are not ultimately endorsed by policymakers.” Their way of framing the problem became the dominant paradigm among their colleagues.

A member of the Vision Sciences Laboratory in Harvard’s Department of Psychology, Julian De Freitas focuses on ethics, social coordination, and the self. He’d never given much thought to AVs before recent alumnus Sam Anthony, who received his PhD from Harvard in 2018, returned to the lab to give a talk on life after graduate school and his new AI company, Perceptive Automata. The two spoke afterward and discovered that they were both confounded by the focus on the trolley problem.

“The dilemma was deliberately constructed to create a tradeoff between two options, both of which result in someone inevitably getting killed,” De Freitas says. “Sam and I agreed that true trolley problems were very unlikely to occur on real roads, so we were perplexed that they were receiving so much attention in the growing literature on AV ethics.”

De Freitas and Anthony decided to take on the prominence of the dilemma in the development of AVs. Joined by one of the Vision Lab’s principal investigators, Professor George A. Alvarez, and the roboticist Andrea Censi, they wrote a scholarly paper laying out their criticisms of the trolley problem, which they called too contrived and impractical to inform decisions about real-world transportation safety.

“Our main point about why the trolley problem is the wrong way to design AVs,” De Freitas says, “is that when we think about the best drivers we know today, that is, human beings, we don’t teach them to drive by saying whom they should harm or favor. Rather, at every step we tell them to avoid harm.”

Common Sense Ethics

The trolley problem, De Freitas points out, strips away all uncertainty from outcomes. In “The Good Place” example, there is a 100 percent probability that five people will die if Chidi stays on track, and a 100 percent probability that one person will die if he switches. In reality, drivers have many different options when disaster looms: swerve, honk the horn to warn those in the way, slam on the brakes.

Moreover, De Freitas continues, no driver—human or AI—can be completely certain of the outcome of their actions. They are unlikely to know in the moment whether they’re in a true trolley-problem-like situation. (Is it truly the case that the only options are to hit the jaywalking pedestrian or the oncoming vehicle, or are there ways to minimize the chances of either?)

Even if an AV did have only two options and clearly understood their implications, De Freitas says, it is unlikely that the options would neatly map onto two morally relevant choices, such as whether to harm one person or five. Further, if the AV is really so constrained that it has only two actions left, it’s unlikely that it has enough control to fully execute either of them.

“How could you possibly hope that a vehicle could use ethical principles to solve dilemmas when you can’t even detect when you’re in a dilemma?” he says. “It’s a very risky way to engineer a vehicle.”

According to De Freitas, the objective for AV designers ought to be the same as for a good driver’s education instructor—to produce a driver who will avoid harm whenever possible. To meet that goal, programmers need to focus on real situations on real roads—not online reactions to imagined scenarios.

“Think about avoiding an obstacle in the road,” he explains. “We try to maneuver around it. We don’t do so recklessly. We’re aware of violating other rules of the road. That situation is ethically relevant, but it’s much more valuable for everyday driving than the trolley problem.”

De Freitas hopes this critique of the trolley problem leads to a “common sense” ethics test that includes more realistic scenarios—both low and high stakes—with tradeoffs between safety and compliance and decisions made with varying degrees of certainty. His goal is both to advance his field and to support the development of safer AVs.

“I see our paper as a call to arms to get people who have social science skills to think about machine ethics problems writ large,” he says. “People are working on engineering problems, but the ethics challenges are why we don’t have AVs on the streets right now. The trolley problem is just one interesting speck in the big picture.”

Photos by Tony Rinaldo
