Seeing How We See

Fenil Doshi, PhD Student


Fenil Doshi is a PhD student in the Department of Psychology and a graduate fellow at the Kempner Institute. He discusses his research on visual representation, its practical applications today, and how he went from aspiring game designer to scientist at Harvard Griffin GSAS.

Filling the Gaps in Our Vision

Have you ever wondered how we can look at a jumble of colors, textures, and shapes and instantly recognize a friend's face or a familiar object? My research delves into this marvel of vision—how our brain transforms the sensory information that hits our eyes into a vivid and meaningful depiction of the world around us. This mental image helps us make sense of our surroundings, navigate through them, and interact effectively. Specifically, I explore how the brain identifies, computes, and pieces together visual information to arrive at an early representation of an object, which lays the foundation for our intricate visual perceptions.

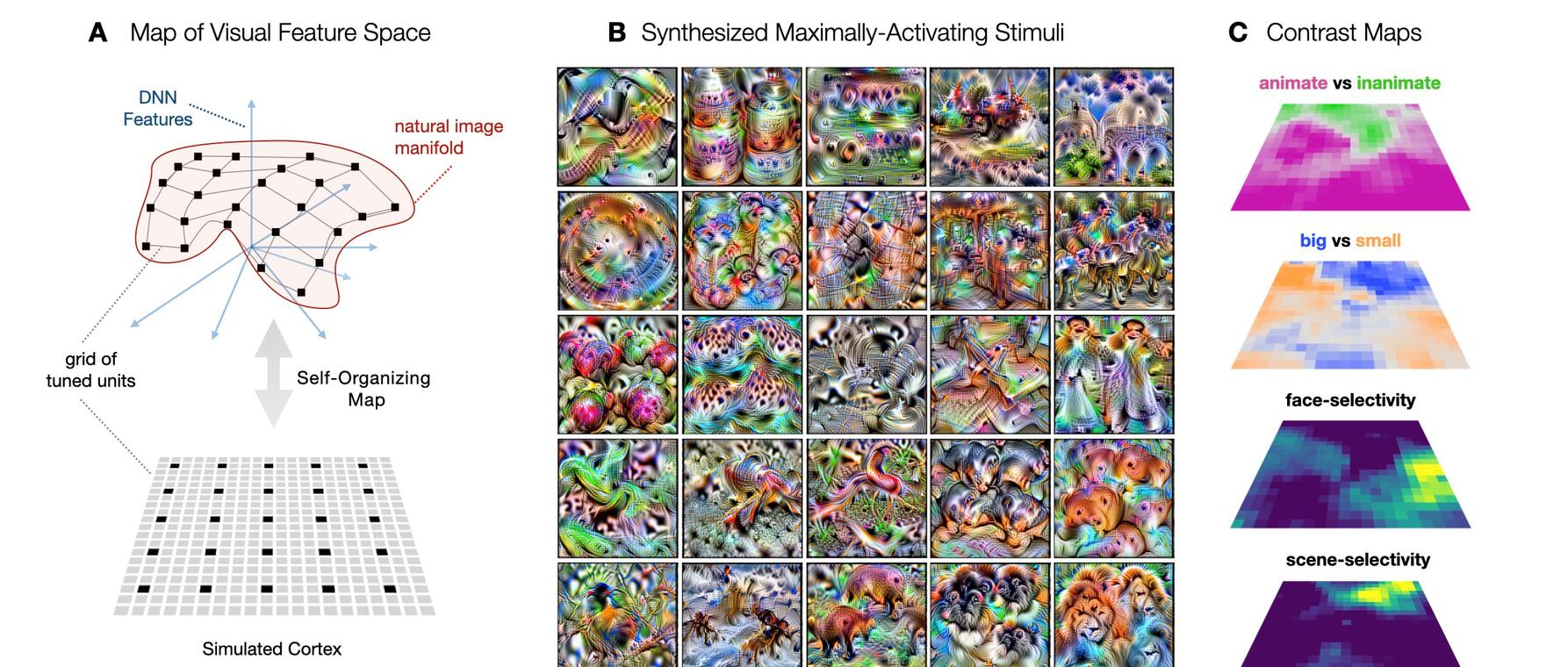

In one of my principal projects, I explored a computational model that simulates the way our brain spatially organizes the visual world that we experience. By integrating rules that resemble our brain's connectivity, we've uncovered insights into how and, more importantly, why our brain represents both large-scale information based on characteristics like an object's size and animacy and categorical information like faces and landscapes. Interestingly, our findings suggest that the brain represents these objects in a shared, unified mental space, a shift from the earlier view of isolated modules that each reflect specialized processing.

Another facet of my research seeks to understand how we group smaller chunks of visual information to form holistic shapes and objects, drawing comparisons between human and artificial vision. This work is paving the way to decipher the intricate code we and our artificial counterparts use to interpret the world visually. For example, picture a toaster oven covered in cat fur. An artificial vision system gets confused and registers it as a cat, misled by the furry texture. In contrast, you and I would still see it as a toaster oven. This thought experiment sheds light on how our biological vision is more sophisticated and nuanced than current technology.

By bringing together insights from neuroscience, cognitive science, and computer science, we can identify and refine the shortcomings in machine vision. This cross-disciplinary approach is crucial for advancing technology to perceive the world more like humans do, bridging the gap between natural and artificial intelligence.

Game Developer to Grad Student

My research journey began in my undergraduate years, when I studied computer science hoping for a career as a video game designer. However, in my final semester, I found myself immersed in a different kind of research at Brigham and Women’s Hospital using computer vision to analyze cell structures. Then, in 2018, I attended a seminar at NYU that explored how computer vision can be leveraged to understand human perception. It changed my course. There, the fusion of artificial intelligence with neuroscience and cognitive science, aimed at deciphering the brain and mind, captivated me. I was hooked by the potential to blend these fields, leading me to delve into cognitive science research. That research started with exploring how humans intuitively understand the physics that constrains the world around us and later looked at how information bottlenecks manifest in high-level cognition. This newfound passion ultimately guided me to pursue a PhD in mid-level visual perception at the Vision Science Lab, a path I’ve found even more fascinating than game design.
