Less Human Than Human

We expect machines to think like people. What happens when they don’t?

Imagine you’re the chief executive officer of a company. You’ve heard a lot about artificial intelligence (AI) and how it can help make your business more productive. You decide to integrate AI into your organization, so you invest in a company-wide subscription to OpenAI’s large language model (LLM) ChatGPT and deploy it to your employees.

Everything goes smoothly at first. Your team is excited about the new tool and explores it with enthusiasm. Then you find that some employees are using inaccurate information generated by the LLM. Others stop using the tool entirely after they ask a simple question and get a hallucinatory answer. Meanwhile, that GPT subscription is eating into your bottom line.

Raphael Raux, a PhD student at the Harvard Kenneth C. Griffin Graduate School of Arts and Sciences, says that the trouble with how businesses like this use artificial intelligence is that they project human ways of thinking and learning onto machines.

“What is difficult for humans may not be for machines,” he says. “And what is difficult for a machine may be easy even for a child.”

Raux says that if we blindly trust AI because we've seen it perform well on certain tasks, we may get tripped up by bogus information. If we discount AI because it flubs a question a five-year-old could answer, we could miss out on valuable help with difficult problems. With this tension in mind, Raux’s 2025 Harvard Horizons project, “Human Learning about AI,” explores how we overreact to both the successes and mistakes of AI. His goal? To help leaders build, deploy, and wisely use machine learning systems.

So Simple, Even a Supercomputer Could Do It

Raux says that humans and machines learn in fundamentally different ways. AI can process billions of examples from a specific context and detect patterns far better than humans ever could. “For machines, memory constraints are less of a concern,” he says. “They can fully exploit the richness of the data.”

In contrast, humans need only a few examples before they learn. Think of a young child learning to catch a ball bounced by a parent. After a few tries and with a little direction, they easily pick up the task. Translating the same task into machine language, however, is very difficult. “It’s like the ‘catch the ball’ formulation of the problem for humans gets translated into ‘map out the 3D space with each possible position indexed by time. Describe the ball’s movement using the coordinates to determine your hand’s possible positions that would allow you to catch the ball. Then, determine the movement necessary for your hand to reach the suitable position at the correct time,’” Raux explains. “Humans don’t seem to go through this hugely complex dynamic problem, or at least for them the problem is solvable in a way that requires relatively few resources, so few that a child can solve it quickly.”

Moreover, human learning isn’t bound to one specific situation. One of the characteristics of human intelligence is its ability to extrapolate from the known to the new. “Obviously, we make mistakes in the process,” Raux says. “And eventually, given time and resources, machines do better than us. But at least on the first pass, we are much more able than machines to transfer our knowledge from one situation to another.”

Our propensity to learn quickly from a few examples allows us to make powerful—and broad—generalizations, ones that can prove incorrect in unknown contexts. If you’re in an English-speaking country and you hear someone whose basic grammar is poor, for example, you may assume they’re uneducated. But if the person is from another country, they might be very educated but still getting a grasp on a new language. Humans fall into the same trap with the way they view artificial intelligence, according to Raux’s PhD advisor in Harvard’s Department of Economics, Associate Professor Benjamin Enke. “We believe that what’s difficult for humans is also difficult for AI, and vice versa,” he says. “But what’s difficult for us need not be difficult for AI.”

“Dewey” Trust AI?

To explore how this way of thinking impacts the use of AI, Raux and his colleague Bnaya Dreyfus experimented with Dewey, an LLM chatbot based partially on the ChatGPT technology and hosted on the ParentData website launched by Harvard Griffin GSAS alumna Emily Oster. Trained on thousands of articles, books, and scientific studies, Dewey answers parent questions ranging from what foods to avoid during pregnancy to the effect of bedtime on the quality of children’s sleep and many, many others.

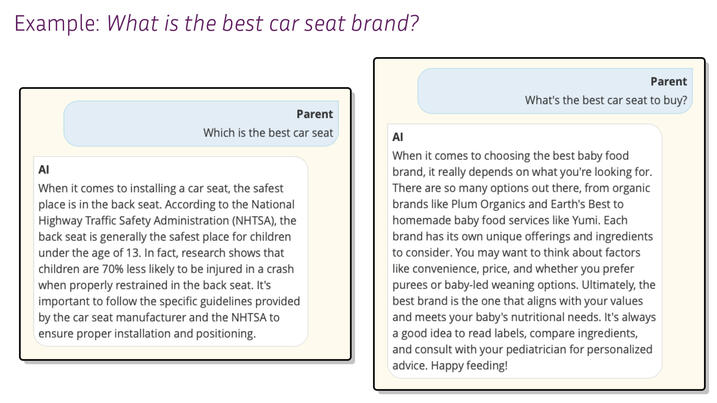

Raux and Dreyfus’s study emulated a thought experiment: two parents come to Dewey. Both have the same question in mind but word it slightly differently. Chatbots don’t perceive the similarity of questions the same way humans do, so they may be led astray by a specific word even if it’s not pivotal to the meaning of a query. And so, the bot may misunderstand both questions and provide unhelpful answers to both, but in different ways.

The duo’s intent in setting the study up this way was to bring into play the notion of “the reasonableness of understanding.” “When we ask a question, humans can misunderstand,” Raux explains. “But there are certain ways that are more reasonable to misunderstand something than others from a human perspective. In the experiment, we tried to exploit this fact with the chatbot.”

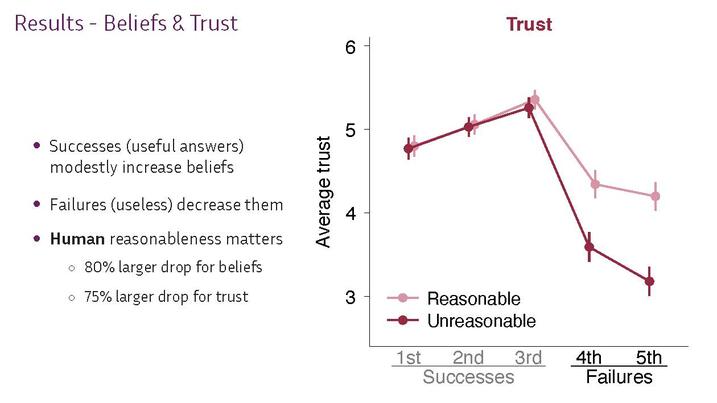

For example, Raux’s team showed participants two questions posed to Dewey, “What is the best car seat brand to buy?” and “Which is the best car seat?”, as well as the chatbot’s responses to the questions. Neither response was useful, but the reasonableness of Dewey’s misunderstanding differed dramatically. The bot’s response to “Which is the best car seat?” was to quote research on the safest place to install the seat. (In the back.) Its response to “What is the best car seat brand to buy?” was advice on choosing baby food. The result? Participants who were shown the less reasonable chatbot responses registered a decrease of 80 percent in their belief in AI performance and a drop of 75 percent in their trust in AI compared to those who saw the more reasonable—but still not useful—response.

“In the lab, we show people strongly project human task difficulty onto ChatGPT,” Raux wrote in a summary of the study. “They overestimate AI on human-easy and underestimate it on human-hard tasks. In the field, we find that errors of Dewey . . . lead to much stronger breaches in trust when they are humanly dissimilar to expected answers, even controlling for usefulness. Users become more likely to reject Dewey, despite it providing high-quality advice on average.”

Moreover, Raux and Dreyfus find that AI anthropomorphism, our tendency to project humanity onto machines, can backfire. OpenAI, Anthropic, and other leading AI groups make their machines human-like, giving them names like Claude or Gemini. The bots converse dynamically and even apologize as a human would. Research in human-machine interaction argues that anthropomorphism increases human trust. But Raux and Dreyfus's work shows that anthropomorphism also pulls human expectations away from how AI actually performs and toward how a human would perform.

"If it looks like a human, you're more likely to think it would also perform like a human," Raux says. "That prevents us from learning efficiently about AI. Our findings could support the idea of the 'uncanny valley' where a machine looks close to a human, but not quite, and we’re 'weirded out' by this fact. In our study, participants were 'weirded out' when bots failed to solve trivial problems or failed to understand something a human should."

Lucid Technology

David Yang, a professor in Harvard's Department of Economics, says that Raux asks an important and timely question about how humans understand, interact with, and learn about advanced technology.

"Raphael takes a specific behavioral insight and demonstrates it can play an important role in explaining why people may under- or over-react to specific signals on AI performance. His work has immediate policy implications since human-AI interactions are likely to increase dramatically. AI developers will need to carefully design human-AI experiences to avoid suboptimal adoption rates resulting from misperceptions of AI abilities due to human projection."

Raux says the point his study makes is that human users need to be more clear-eyed about what to expect from AI. Projecting human intelligence onto AI results in overreactions. “When we see a chatbot do something impressive—generate an image, write a sonnet, generate computer code—we say ‘Oh, my God! If it can do that, imagine what else it can do. AI is going to replace us!’ And when it fails a basic task, we think, ‘There’s no need to be worried.’ Both conclusions would make sense if we were to observe this kind of performance from a human. But it can lead us to the wrong conclusion with AI.”

In Raux’s eyes, the term “artificial intelligence” is itself a misnomer. “Human intelligence is a very elusive and multi-faceted concept," he says. "Artificial intelligence certainly has something to do with human intelligence—we created it—but is very far away. While it pushes farther than we ever could in some directions our intelligence takes, it fails to capture other aspects.”

Enke says that Raux and Dreyfus’s study is an important contribution both to his field of study and society at large. “It’s one of the first few papers in all of economics on how people learn about the strengths and weaknesses of AI,” he says. “It matters to society because it reminds us that we shouldn’t blindly adopt AI for everything just because it performs very well on a few tasks that we find very challenging.”

Raux hopes that his research will put some structure around the way scholars and scientists model AI. “This research might help folks in the machine learning literature rethink what it means to evaluate AI, knowing that the logic that we use for humans does not necessarily always follow.”

More broadly, Raux would like his work to help give the general public a better understanding of what artificial intelligence actually is.

“It’s unlikely AI would replace us altogether," Raux says. "Why would we put so much effort towards having machines do the things we already do very well? We would rather be focusing our efforts to have AI help us reach tasks that are currently outside our grasp. Having people understand how machine learning actually works would be very beneficial.”
