Through AI, New Dimensions in Physics

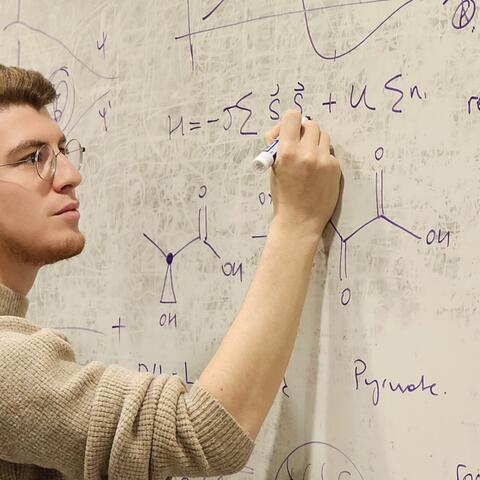

Aurélien Dersy, PhD Student

Aurélien Dersy is a sixth-year PhD student in physics at Harvard Griffin GSAS whose work straddles high-energy theoretical physics and artificial intelligence. A native of Switzerland, Dersy uses large language models—like those behind ChatGPT—to accelerate and deepen our understanding of the fundamental forces of nature. He reflects on the path that brought him to Harvard Griffin GSAS, how teaching shaped his intellectual growth, and why sometimes you have to simplify to see the truth.

A Spark in High School

I have always been fascinated by physics. Neither of my parents is a scientist (they both work in finance), but I remember the precise moment I decided I wanted to study physics seriously. I was in high school, learning about Newton’s laws, and it just clicked. The idea that you could predict how an object would move simply by writing down an equation was magical to me. I thought, This is what I want to do for the rest of my life.

I grew up in Zurich, Switzerland, but I attended a French-speaking school and speak both French and German. My undergraduate and master’s studies were at EPFL—the École Polytechnique Fédérale de Lausanne—where I focused on high-energy physics. Toward the end of my program, I began working with a professor who encouraged me to broaden my horizons. He had done part of his career in the United States and suggested I look into doctoral programs there.

At the time, I was not certain I wanted to pursue a PhD immediately. I took a job in private equity, building machine learning models. It was a very different kind of work, but ultimately formative. When I came across Professor Matthew Schwartz at Harvard and saw that he was integrating machine learning with theoretical physics, I knew I had found something special. I submitted my application a day before the deadline.

Finding Cracks in the Standard Model

My work today falls into two broad areas. The first is more traditional theoretical physics: I study quantum field theory, the framework behind the standard model of particle physics, which describes three of the four fundamental forces of nature (electromagnetism and the weak and strong nuclear forces, but not gravity). At particle colliders, such as CERN’s Large Hadron Collider, physicists smash particles together and study the results. My job is to predict those outcomes using mathematical tools.

That may sound straightforward, but the math is anything but. The calculations often rely on perturbative approximations, which expand the answer as a series in a small parameter. These series frequently diverge, which on the surface is a completely undefined result. My research focuses on investigating the deep mathematical structure of these problems and extracting useful insights, even from seemingly intractable expressions.
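For readers curious about what a divergent series looks like in practice, here is a minimal sketch built on a standard textbook toy example (Euler's series), not a calculation from the research described here. The integral of exp(-t) / (1 + x t) over t from 0 to infinity is perfectly finite, yet expanding it in powers of x gives the series with terms (-1)^n n! x^n, which diverges for every nonzero x. The function names, the choice x = 0.1, and the integration settings are all illustrative assumptions.

```python
import math


def stieltjes_integral(x, steps=200_000, t_max=50.0):
    """Trapezoid-rule estimate of the integral of exp(-t) / (1 + x*t) on [0, t_max].

    The tail beyond t_max = 50 is smaller than exp(-50) and is ignored.
    """
    h = t_max / steps
    # Endpoint contributions: f(0) = 1 and f(t_max).
    total = 0.5 * (1.0 + math.exp(-t_max) / (1.0 + x * t_max))
    for i in range(1, steps):
        t = i * h
        total += math.exp(-t) / (1.0 + x * t)
    return total * h


def partial_sums(x, n_terms):
    """Running partial sums of the divergent series sum_n (-1)^n n! x^n."""
    sums, running = [], 0.0
    for n in range(n_terms):
        running += (-1) ** n * math.factorial(n) * x ** n
        sums.append(running)
    return sums


x = 0.1  # illustrative "small parameter"
exact = stieltjes_integral(x)
errors = [abs(s - exact) for s in partial_sums(x, 30)]

# The error shrinks for the first several terms, then grows without bound.
best_order = min(range(len(errors)), key=errors.__getitem__)
print(f"reference value: {exact:.6f}")
print(f"smallest error {errors[best_order]:.2e} at order {best_order}")
print(f"error after 30 terms: {errors[-1]:.2e}")
```

In this toy case the first several terms give an excellent approximation, and adding more terms past that point only makes the answer worse. That is the sense in which a divergent series can still carry useful information.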

The other side of my work is what excites me most: using artificial intelligence for symbolic computation.

We are used to applying machine learning to images or language, but it can also be applied to equations. I have worked on projects in which enormously complicated expressions—sometimes running 20 pages or more—were simplified by an AI model, in some cases down to a single line. Large language models, it turns out, are surprisingly good at this. They can handle mathematical language much as they manage natural language.

What is exciting is that these models see the data differently than we do. Humans tend to interpret things in two or three dimensions, but AI can process patterns in hundreds of dimensions. This means a model might recognize something unusual—for instance, a blip in the experimental data that looks unremarkable to us but turns out to be evidence of new physics.

Why does this matter? The standard model of physics is powerful, but we know it is incomplete. Our experiments are becoming ever more precise—so precise, in fact, that theory can no longer keep pace using traditional methods. We need better tools to calculate, simulate, and test our predictions. Machine learning can help us do that. It is not about replacing physicists; it is about expanding what we are capable of seeing and doing.

And if we do find an inconsistency—something the standard model cannot explain—that could be the first hint of something entirely new.

Lessons in Simplicity

My advisor, Professor Matthew Schwartz, has been instrumental in shaping the way I approach research. One thing he told me early on has stayed with me: If your problem is a good problem, you should be able to understand it with a toy model. In other words, do not get lost in the complexity: simplify, build intuition, return to the basics.

At first, it felt strange to revisit problems that were already in textbooks. Why redo something that has already been solved? But time and again, I have found that when you dig into the fundamentals, you discover gaps in the logic, overlooked assumptions, and opportunities for genuine insight. Sometimes the most surprising discoveries arise from the simplest questions.

The Joy of Teaching

For all the excitement of research, one of the most meaningful aspects of my time at Harvard has been teaching. I have taught courses in undergraduate classical mechanics as well as graduate quantum field theory, and the experience has been deeply rewarding.

There is a special clarity that comes from teaching a subject. You think you understand something—until you must explain it to someone else. That is when the gaps reveal themselves. But when you see that lightbulb go on in a student’s eyes, when they truly grasp the concept, it is incredibly fulfilling.

In research, it can take years to see the impact of your work. In the classroom, the rewards are immediate. I think that is something I will carry with me: the sense that knowledge is not only about discovery but also about sharing what you have discovered with others—and helping them see the beauty in it too.

Aurélien Dersy’s research has been supported by the National Science Foundation (NSF) under Cooperative Agreement PHY-2019786 (The NSF AI Institute for Artificial Intelligence and Fundamental Interactions).
