Just Data

How algorithms go bad, and how they can be saved

Earlier this year, Facebook feeds filled with selfies alongside works of art, matched through the Google Arts and Culture app. While many enjoyed connecting themselves to famous portraits by Renoir or Rembrandt, others raised privacy concerns, citing the dangers associated with facial recognition software.

Still others pointed out that the art in the dataset was mainly Western and predominantly by men, so people of color found few matches, and some of those were inappropriate or offensive. Because the underlying data wasn’t inclusive, the facial recognition algorithm was biased. But apart from the obvious insult, the fact that the algorithm didn’t work well isn’t that big a deal, is it?

“Nobody’s losing their job because the wrong art is attached to a picture,” says Cathy O’Neil, PhD ’99, who writes about algorithms and their unintended consequences on her blog, mathbabe.org. “But first of all, it’s insulting. Second of all, it’s careless. And third, it’s emblematic of what is happening with less visible algorithms making more important calculations.” O’Neil hopes that people following the fallout from the Google Arts and Culture app will ask themselves: if public-facing algorithms are this bad and this carelessly put into production, how worried should we be about algorithms that operate without scrutiny?

Training Ground

A growing number of industries, impressed by the potential of Big Data, now rely on algorithms to do work that humans used to do. As with the arts and culture app, the data used to train these algorithms can be biased. O’Neil calls the most harmful of these algorithms “Weapons of Math Destruction.” “What makes something a weapon of math destruction is a combination of three factors,” she explains. “It’s important, it’s secret, and it’s destructive.”

Before we talk about what can make an algorithm bad, let’s consider when it works well. Take Moneyball, the bestselling book turned movie about the Oakland Athletics baseball team’s analytical approach to player selection. By reviewing player statistics instead of relying on scouts’ gut feelings about a player’s potential, the organization assembled a team that performed at the highest level. What kept their algorithm from being a weapon of math destruction was that they updated their data as new player statistics came in, which let them refine their results and check whether each player they picked lived up to the prediction. The algorithm was able to learn, becoming a better predictor of which players would be most effective.
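The feedback loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Athletics’ actual method: it assumes a single statistic (a player’s on-base rate) modeled with a Beta distribution, and the names `OnBaseEstimate` and `update_estimate`, along with all the numbers, are hypothetical. Each batch of new results is first compared with the current prediction and then folded into the estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OnBaseEstimate:
    """Hypothetical Beta(alpha, beta) belief about a player's on-base probability."""
    alpha: float  # pseudo-count of plate appearances that reached base
    beta: float   # pseudo-count of plate appearances that ended in an out

    @property
    def mean(self) -> float:
        # Mean of the Beta distribution: the current point prediction.
        return self.alpha / (self.alpha + self.beta)


def update_estimate(est: OnBaseEstimate, times_on_base: int, plate_appearances: int) -> OnBaseEstimate:
    """Fold newly observed outcomes into the estimate (conjugate Beta-Binomial update)."""
    outs = plate_appearances - times_on_base
    return OnBaseEstimate(est.alpha + times_on_base, est.beta + outs)


# Hypothetical starting guess: about .300, weighted like 100 plate appearances.
est = OnBaseEstimate(alpha=30, beta=70)

# Hypothetical batches of new statistics; each one tests and then refines the prediction.
for on_base, pa in [(40, 100), (42, 100), (39, 100)]:
    predicted = est.mean
    observed = on_base / pa
    print(f"predicted {predicted:.3f}, observed {observed:.3f}")
    est = update_estimate(est, on_base, pa)

print(f"current estimate: {est.mean:.3f}")
```

The conjugate Beta-Binomial update is used here only because it reduces the bookkeeping to two counts; the point is that each new outcome both checks the previous prediction and changes the next one.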

Not all algorithms are retrained on continuously updated data. Hiring is one example. In addition to using algorithms to evaluate resumes, many employers also look at applicants’ credit scores, assuming that a higher score correlates with better employee behavior. Auto insurers make a similar assumption about driving, so someone with a perfect driving record but a lower credit score may end up paying hundreds of dollars more a year than someone with a poor record and excellent credit. The problem isn’t only that low credit scores can arise for many reasons wholly unconnected with employee behavior or safe driving; it’s that no mechanism exists to check whether credit scores actually predict those outcomes. The applicant never learns the real reason they weren’t called for an interview, or that their insurance quote was higher than someone else’s. Meanwhile, the company using the algorithm has no idea whether it passed over the best employee for the job or is charging a great driver more than a poor one. The algorithm doesn’t learn, and the bias in its data goes uncorrected.

Detective Work

At the Harvard John A. Paulson School of Engineering and Applied Sciences, researchers are working out how to identify bias and, perhaps, correct for it.

“One of the issues researchers have identified is that machine learning algorithms can reflect societal biases that exist in the data used to train these algorithms,” says Flavio Calmon, an assistant professor of electrical engineering. “This is particularly important because many of these algorithms are increasingly applied at the level of the individual, for example, in recidivism prediction, loan approval, and hiring decisions.”

Even when algorithms don’t explicitly discriminate by race or gender, they often use data containing proxies for those attributes, such as income or education level. Because these proxies can correlate strongly with race or gender, the output can still be discriminatory.
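A toy Python example can make the proxy problem concrete. Everything in it is hypothetical: two groups, a neighborhood label that happens to be correlated with group membership, and a rule, `race_blind_model`, that looks only at the neighborhood. The rule never sees group membership, yet the share of each group it flags differs sharply.

```python
from collections import defaultdict

# Hypothetical toy records: (group, neighborhood). The model never sees "group".
records = [
    ("A", "north"), ("A", "north"), ("A", "north"), ("A", "south"),
    ("B", "south"), ("B", "south"), ("B", "south"), ("B", "north"),
]


def race_blind_model(neighborhood: str) -> bool:
    """Hypothetical rule that flags someone as high risk using only their neighborhood."""
    return neighborhood == "north"


def flag_rates(rows: list[tuple[str, str]]) -> dict[str, float]:
    """Share of each group that the neighborhood-only rule flags."""
    flagged: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, neighborhood in rows:
        total[group] += 1
        flagged[group] += int(race_blind_model(neighborhood))
    return {g: flagged[g] / total[g] for g in total}


rates = flag_rates(records)
print(rates)  # {'A': 0.75, 'B': 0.25}
```

Removing the sensitive attribute from the inputs does not, by itself, make the outcomes neutral; the correlation between neighborhood and group does the work.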

How the data is collected is key. A person of color living in a poor neighborhood, for example, is more likely to be stopped by police than, say, a white person in a more affluent area. If police find evidence of a crime, however minor, the first person is likely to end up with a record, while the more affluent person is either not investigated or let go. Data about who was arrested, for what, and where then feeds algorithms that estimate the probability that an arrested person will commit another crime, known as recidivism risk. Here’s where the bias comes in: if the data consists predominantly of people of color living in poorer neighborhoods, the algorithm will learn, through proxies, to predict that a person of color living in such a neighborhood will offend again. Intentional or not, the data reflects racial bias.
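The arithmetic behind this can be sketched under simplifying assumptions, all of them hypothetical: two neighborhoods with the same true offense rate, different probabilities that a resident is stopped, offenses that occur independently of stops, and every offense discovered during a stop ending up on record. Under those assumptions, the recorded rate is simply the offense rate multiplied by the stop probability.

```python
def recorded_rate(offense_rate: float, stop_probability: float) -> float:
    """Expected share of residents with a record, assuming offenses occur independently
    of stops and every offense discovered during a stop is recorded."""
    return offense_rate * stop_probability


# Hypothetical neighborhoods with the SAME true offense rate but different policing intensity.
neighborhoods = {
    "heavily policed": {"offense_rate": 0.10, "stop_probability": 0.50},
    "lightly policed": {"offense_rate": 0.10, "stop_probability": 0.10},
}

recorded = {name: recorded_rate(**params) for name, params in neighborhoods.items()}
for name, rate in recorded.items():
    print(f"{name}: {rate:.1%} of residents have a record")
```

With these made-up numbers, the records show five times as many offenders in the heavily policed neighborhood even though behavior is identical, and a model trained on those records would treat that difference as real.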

The nonprofit news organization ProPublica investigated how recidivism risk assessments are made across the country, focusing on risk scores assigned to individuals in Broward County, Florida, and tracking whether those individuals later reoffended. Although the software that produced the risk scores does not take race into account, the reporters’ findings were shocking: Black defendants who did not reoffend were more likely than white defendants to be labeled high risk, while white defendants who did reoffend were more likely to be labeled low risk. Something in the data was serving as a proxy for race, producing what is known as disparate impact.
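The kind of comparison ProPublica made can be sketched by computing error rates separately for each group. The sketch below uses hypothetical counts, not ProPublica’s data, and hypothetical names (`Case`, `error_rates`, `make_cases`). A false positive is someone labeled high risk who did not reoffend; a false negative is someone labeled low risk who did.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    group: str
    predicted_high_risk: bool  # the label the risk score assigned
    reoffended: bool           # what actually happened later


def error_rates(cases: list[Case], group: str) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) for one group.

    False positive: labeled high risk but did not reoffend.
    False negative: labeled low risk but did reoffend.
    """
    rows = [c for c in cases if c.group == group]
    negatives = [c for c in rows if not c.reoffended]
    positives = [c for c in rows if c.reoffended]
    if not negatives or not positives:
        raise ValueError(f"group {group!r} needs both outcomes to compute error rates")
    fpr = sum(c.predicted_high_risk for c in negatives) / len(negatives)
    fnr = sum(not c.predicted_high_risk for c in positives) / len(positives)
    return fpr, fnr


def make_cases(group: str, fp: int, tn: int, tp: int, fn: int) -> list[Case]:
    """Build hypothetical records from confusion-matrix counts."""
    return ([Case(group, True, False)] * fp + [Case(group, False, False)] * tn
            + [Case(group, True, True)] * tp + [Case(group, False, True)] * fn)


# Hypothetical counts, not ProPublica's data.
cases = make_cases("X", fp=2, tn=2, tp=3, fn=1) + make_cases("Y", fp=1, tn=3, tp=2, fn=2)

for g in ("X", "Y"):
    fpr, fnr = error_rates(cases, g)
    print(f"group {g}: false positive rate {fpr:.2f}, false negative rate {fnr:.2f}")
```

In this made-up example, group X has twice group Y’s false positive rate while group Y has twice group X’s false negative rate, the same shape of disparity the reporters described.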

“It’s a matter of detecting that a problem exists,” says Calmon. “For example, you use an algorithm that outputs recidivism predictions and notice that the quality of the recidivism prediction is different for different races. Because you know that race shouldn’t be an input to the algorithm, you have to determine which attributes used by the algorithm are discriminating on race.”
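One way to start asking which attributes are “discriminating on race” is to measure how much each input reveals about the protected attribute. The sketch below uses mutual information, a standard information-theoretic quantity, estimated from counts on hypothetical discrete data. The feature names and values are made up, and this is a generic illustration, not the specific method the Harvard researchers developed.

```python
from collections import Counter
from math import log2


def mutual_information(xs: list[str], ys: list[str]) -> float:
    """Plug-in estimate of the mutual information I(X; Y), in bits, between two discrete sequences."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("sequences must be non-empty and the same length")
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # Each observed (x, y) pair contributes p(x,y) * log2(p(x,y) / (p(x) * p(y))).
        mi += p_xy * log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Hypothetical records: the protected attribute and three candidate input features.
race = ["A", "A", "A", "A", "B", "B", "B", "B"]
features = {
    "neighborhood": ["north", "north", "north", "north", "south", "south", "south", "south"],
    "income_bracket": ["low", "low", "low", "high", "high", "high", "high", "low"],
    "shirt_size": ["small", "large", "small", "large", "small", "large", "small", "large"],
}

# Rank features by how much information each one carries about race.
scores = {name: mutual_information(values, race) for name, values in features.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
for name in ranking:
    print(f"{name}: {scores[name]:.3f} bits")
```

A score near zero means the feature says little about race; a score near the maximum (1 bit for two equally sized groups) means it is close to a perfect proxy. Plug-in estimates on small samples tend to overstate mutual information, so a real audit would need far more data than this toy.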

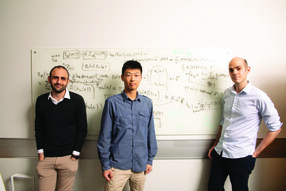

Hao Wang, a graduate student in Calmon’s lab, has been working with Calmon and Berk Ustun, a postdoctoral fellow, on a way to address the issue. Wang is the lead author of their paper, “On the Direction of Discrimination: An Information-Theoretic Analysis of Disparate Impact in Machine Learning.” “Our knowledge of information theory inspired us to take on this project,” Wang explains. “In this paper, we are using tools from information theory to understand prominent disparate impact.” The tools are decades old but had never before been used for this purpose.

Using these tools, Wang developed a correction function that identifies the proxies creating the disparate impact, and he tested it successfully on ProPublica’s dataset. The method is a major step toward a tool that can correct training data, mitigate discrimination, and reduce disparate impact.

“Having well-founded auditing tools to detect disparate impact and explain how it occurs helps us make informed decisions as to whether an algorithm should be used,” says Ustun. “Even if you can’t correct it, you can stop using that algorithm and build one that mitigates disparate impact.”

The Mechanics

Calmon, Ustun, and Wang stress that while their work is important, it is only part of the solution. Discussions about how algorithms are trained and how they are used are essential and must involve people beyond computer science. “We’re more like the mechanics that build the engine in the car,” says Calmon. “Our role is to make sure that the algorithms and the mathematical tools that underlie it influence that discussion.”

Ustun believes that it is important for computer scientists to add their voices to the discussion. “Given that algorithms are used to make important decisions,” he says, “as mechanics, we have an important role in pointing out when an algorithm isn’t fair, when it shouldn’t be used for a particular decision.”

But ultimately, the issues extend beyond those who study algorithms. “The definitions of discrimination and fairness are not in the hands of computer scientists or engineers,” says Calmon. “This should be discussed together with those in the legal sphere, and in the social sciences, philosophy, and so on.” He hopes that the methods he, Ustun, and Wang are developing will uncover proxies for discrimination and serve as an auditing tool, so that experts can analyze an algorithm’s output and understand it in a broader context.

O’Neil agrees about the importance of a broader conversation. In a 2017 op-ed for The New York Times, she called on academia to take the lead in studying how technology affects our lives by bringing multiple stakeholders together to discuss and recommend solutions. Such an interdisciplinary effort, she argued, would require a new academic discipline dedicated to algorithmic accountability.

“It’s a really complicated, messy conversation, and one solution won’t fit every problem,” she says. “But it absolutely must involve the computer scientists who build these algorithms. So, the answer is, it cannot happen in academia as it stands, but it must happen in academia, because it’s not going to happen anywhere else.”

Photo by Mark Ostow, Illustration by John Hersey